As hyperscalers prepare to invest billions in new artificial intelligence data centers, DRAM chip prices have risen roughly sevenfold over the past year, according to market data from TrendForce. While discussions about AI infrastructure costs typically center on Nvidia and graphics processing units, memory orchestration has emerged as a critical factor in determining which companies can operate profitably in the evolving AI landscape.

The rapid price increase in DRAM chips comes at a time when efficient memory management is becoming essential for AI operations. Companies that master the discipline of directing data to the right agent at the right time will be able to process queries with fewer tokens, potentially making the difference between profitable operations and business failure.

Understanding AI Memory Management Challenges

Semiconductor analyst Doug O’Laughlin recently explored the growing importance of memory chips in AI infrastructure on his Substack, featuring insights from Val Bercovici, chief AI officer at Weka. Their discussion highlighted how memory orchestration extends beyond hardware considerations to impact AI software architecture significantly.

Bercovici pointed to the expanding complexity of Anthropic’s prompt-caching documentation as evidence of the field’s evolution. According to Bercovici, what began as a simple pricing page six or seven months ago has grown into what he described as “an encyclopedia of advice” on purchasing cache writes and choosing among timing tiers.

The Economics of Cache Optimization

The interview revealed that Anthropic offers customers different cache memory windows, with five-minute and one-hour tiers available for purchase. Drawing on cached data costs significantly less than processing new queries, creating substantial savings opportunities for companies that manage their cache windows effectively.
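To make the savings concrete, the arithmetic can be sketched in a few lines of Python. This is a rough illustration, not a quote from any provider's price list: the base price and the write and read multipliers are placeholder assumptions, the names `CachePricing` and `prompt_cost` are hypothetical, and the model assumes every request reuses the same prompt prefix and arrives while the cache window is still open.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CachePricing:
    """Illustrative pricing; every number passed in is an assumption."""
    base_input_per_mtok: float  # price per million uncached input tokens
    write_multiplier: float     # cost of writing to cache, relative to base
    read_multiplier: float      # cost of reading from cache, relative to base


def prompt_cost(pricing: CachePricing, prefix_tokens: int,
                requests: int, cached: bool) -> float:
    """Cost of sending the same prompt prefix `requests` times.

    With caching, the first request pays the write price and every later
    request pays the cheaper read price. Assumes no request misses the window.
    """
    if requests <= 0:
        return 0.0
    per_token = pricing.base_input_per_mtok / 1_000_000
    if not cached:
        return prefix_tokens * requests * per_token
    write = prefix_tokens * per_token * pricing.write_multiplier
    reads = prefix_tokens * (requests - 1) * per_token * pricing.read_multiplier
    return write + reads


# Placeholder figures chosen only to show the shape of the calculation.
pricing = CachePricing(base_input_per_mtok=3.0,
                       write_multiplier=1.25,
                       read_multiplier=0.1)
uncached = prompt_cost(pricing, prefix_tokens=50_000, requests=20, cached=False)
cached = prompt_cost(pricing, prefix_tokens=50_000, requests=20, cached=True)
print(f"uncached: ${uncached:.4f}  cached: ${cached:.4f}")
```

Under these assumed figures the cached run costs a fraction of the uncached one, but the gap narrows quickly if requests arrive after the window has expired and the prefix has to be written again.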

However, this optimization comes with technical challenges. Each new piece of data added to a query may displace existing information from the cache window, requiring careful planning and resource allocation. The complexity of these decisions underscores why memory management will be crucial for AI companies moving forward.
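The displacement problem can be pictured with a toy model. The sketch below is an assumption-laden illustration, not a description of how Anthropic or any other provider implements caching: it treats the cache as a fixed token budget and evicts the least recently used entry when new data does not fit. The class name `TokenBudgetCache` and its methods are hypothetical.

```python
from collections import OrderedDict


class TokenBudgetCache:
    """Toy cache with a fixed token budget and least-recently-used eviction."""

    def __init__(self, capacity_tokens: int) -> None:
        self.capacity_tokens = capacity_tokens
        self.used = 0
        self._entries: OrderedDict[str, int] = OrderedDict()  # key -> tokens

    def get(self, key: str) -> bool:
        """Return True on a hit and mark the entry as recently used."""
        if key not in self._entries:
            return False
        self._entries.move_to_end(key)
        return True

    def put(self, key: str, tokens: int) -> list[str]:
        """Add an entry and return the keys displaced to make room for it."""
        if tokens > self.capacity_tokens:
            raise ValueError("entry is larger than the whole cache")
        if key in self._entries:
            self.used -= self._entries.pop(key)
        evicted = []
        while self.used + tokens > self.capacity_tokens:
            old_key, old_tokens = self._entries.popitem(last=False)
            self.used -= old_tokens
            evicted.append(old_key)
        self._entries[key] = tokens
        self.used += tokens
        return evicted


cache = TokenBudgetCache(capacity_tokens=100)
cache.put("system_prompt", 60)
cache.put("reference_docs", 30)
cache.get("system_prompt")               # touching it keeps it "fresh"
displaced = cache.put("new_context", 40)
print(displaced)
```

In this model, adding 40 tokens of new context pushes out the reference documents, so the next query that needs them pays full price again. That trade-off is the planning problem the paragraph above describes.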

Market Opportunities in Memory Orchestration

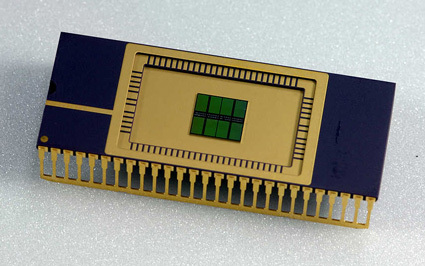

Multiple layers of the AI stack present opportunities for innovation in memory management. At the hardware level, data centers must decide when to deploy DRAM chips versus high-bandwidth memory, according to the discussion between O’Laughlin and Bercovici. Meanwhile, software developers work on higher-level optimizations, structuring model configurations to maximize shared cache benefits.

Additionally, specialized startups are emerging to address specific optimization challenges. In October, TechCrunch covered Tensormesh, a company focused on cache optimization that raised funding to help AI operations extract more inference capability from server loads.

Impact on AI Economics

Improved memory orchestration allows companies to reduce token usage, directly lowering inference costs. Combined with models becoming more efficient at processing individual tokens, these advances are pushing operational expenses downward across the industry.

As server costs fall, AI applications that are not economically viable today could become feasible. This shift could enable new use cases and business models as the cost barrier to entry continues to decrease.

The semiconductor industry expects continued evolution in both hardware capabilities and software optimization techniques. As companies refine their approaches to AI memory management, the competitive landscape will likely favor organizations that successfully balance technical complexity with cost efficiency, though the timeline for widespread implementation remains uncertain.